Understanding FGSM Attacks

Explaining how Fast Gradient Sign Method generates adversarial examples.

We break down how gradient-based attacks work, visualize perturbations, and measure model robustness against adversarial examples.

Generate and defend adversarial images (FGSM, PGD, DeepFool) in CNNs with Streamlit demos and visualization tools.

Explaining how Fast Gradient Sign Method generates adversarial examples.

We break down how gradient-based attacks work, visualize perturbations, and measure model robustness against adversarial examples.

Simulate prompt injection, data exfiltration, and jailbreaks on LLMs. Evaluate defenses with sanitization and guardrails.

Detect anomalies in network traffic using ML and AI models. Compare Random Forest, XGBoost, and Autoencoders.

Inject malicious samples during model training and measure performance degradation under data poisoning attacks.

Framework for AI robustness testing — model fuzzing, prompt injection, and dataset integrity validation.

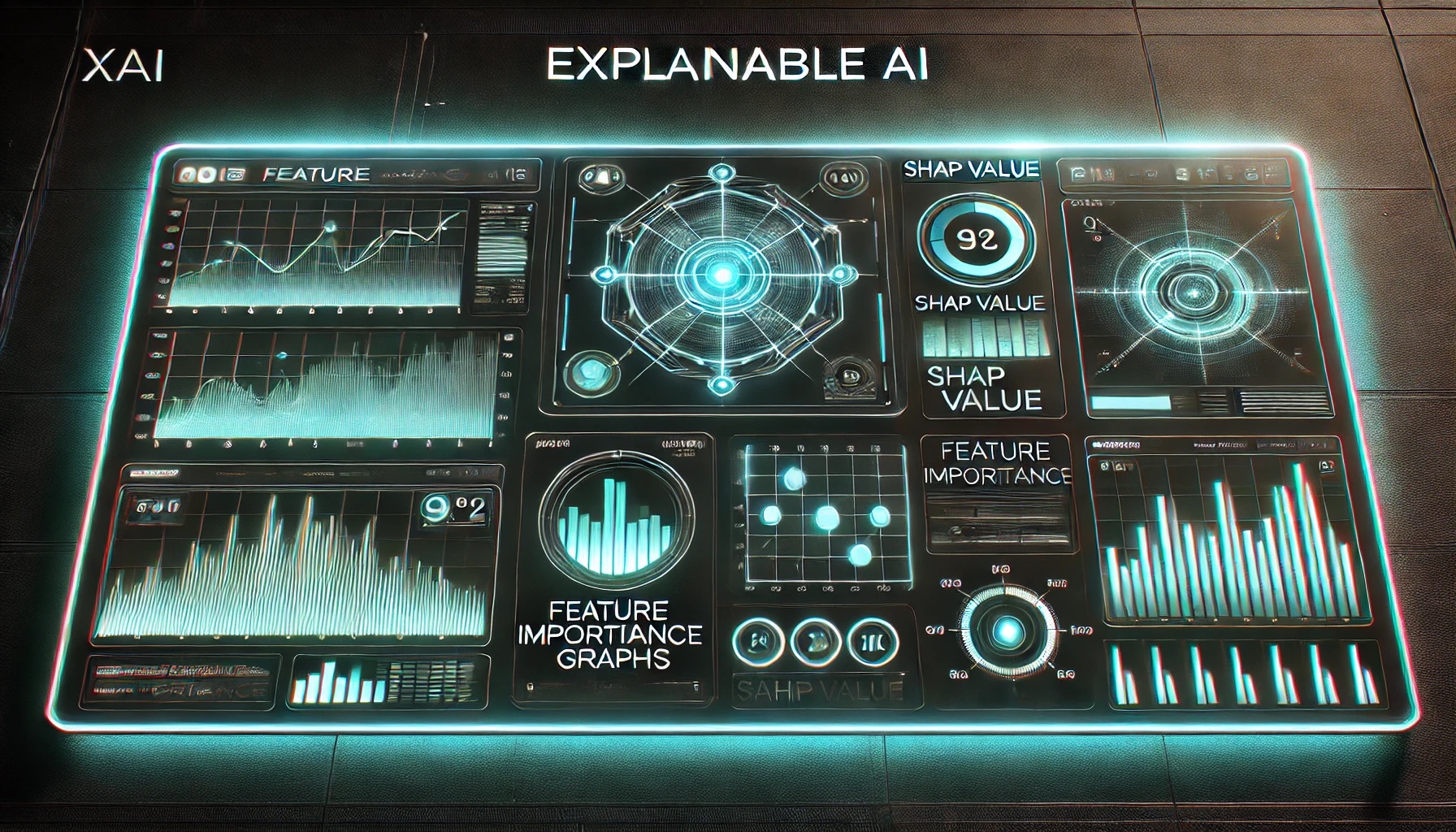

Interactive dashboard for explainability using SHAP & LIME to visualize model decision logic and feature influence.

This entry is hidden and won’t appear on the homepage.